--------------------------------------------

i n v e s t i g a t o r s :

Keith Evan Green

Architecture, PI

Ian D. Walker

Robotics Engineering, Co-PI

Johnell Brooks

Human Factors Psychology, Co-PI

--------------------------------------------

f u n d i n g :

U.S. National Science Foundation (under review)

--------------------------------------------

t e a m :

Stan Healy

Healthcare Administration, GHS

William Logan Jr. MD

Gerontology, GHS

--------------------------------------------

a d v i s o r y b o a r d :

Prof. M. Elaine Cress, Ph.D.

Director, Aging Physical Perf. Lab

Department of Kinesiology

University of Georgia

Marjorie George

Program Director

Alzheimer's Association

SC Chapter

Doris J. Gleason

Director, Community Outreach

AARP-SC

Jane M. Rohde, AIA, FIIDA, ACHA, AAHID

Principal and Architect

JSR Associates, Inc.

Senior Living and Healthcare

Ellicott City, MD

--------------------------------------------

o u r h o m e l a b:

View of our home lab that we designed and fabricated. Located within the Roger C. Peace Rehabilitation Hospital of the Greenville Hospital System University Medical Center, the home lab has all the features of a typical studio apartment, including kitchen and dining areas, laundry room, and closet space.


the home+ vision history

-----------------------------------------------------------------------------------------------------------

overview

As healthcare becomes more digital, more technological, and increasingly costly, healthcare environments have yet to be embedded with digital technologies that support the most productive (physical) interaction among patients, clinicians, and the physical artifacts that surround and envelop them. This shortcoming motivated our larger team to envision home+, a suite of networked, distributed “robotic furnishings” integrated into existing domestic environments (for aging in place) and healthcare facilities (for clinical care).

We have initially developed a working prototype of the Assistive Robotic Table (ART) with support from NSF's Smart Health & Wellbeing Program (SHB, now SCH). Before and during the development of ART, our team worked on realizing the larger ambition for home+, of which ART is a key component. The history of our early home+ effort is presented below; its foci include machine learning, perceptual systems, mobile robots, and a range of robotic furnishings.

-----------------------------------------------------------------------------------------------------------

initial concept

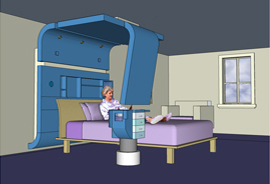

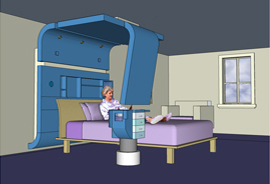

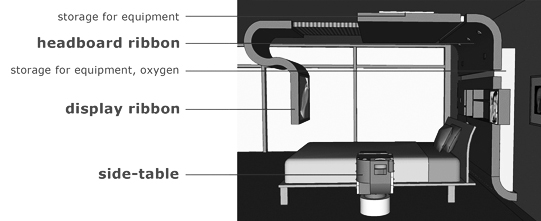

home+ | 2009 start

Conceived by the three investigators - Green, Walker, and Brooks - working together, this is our first home+ concept - "comforTABLE" - developed and communicated in digital simulation only.

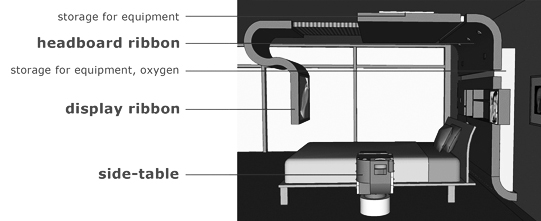

The key components of the first home+ are:

• The headboard ribbon, a robotic surface situated on the back wall above the shoulders, which twists to adjust lighting and audio levels.

• The display ribbon, a novel continuum robotic surface situated overhead, which slightly twists and contracts/elongates to allow the most comfortable interaction with its embedded touch display screen for work and leisure activities.

• The side-table can raise, lower, and rotate about its base to make its various components and surfaces more accessible to the user.

As presented in the 2x3 grid of still images above, we envisioned home+ supporting individuals of all ages and mental and physical capacities, at home and in the clinic.

The first home+ (aka comforTABLE) | key components:

Our early paper for HRI introducing this first home+ concept:

K.E. Green, I.D. Walker, J.O. Brooks, and W.C. Logan, Jr. comforTABLE: A Robotic Environment for Aging in Place, late-breaking paper, HRI’09, the IEEE/ACM International Conference on Human-Robot Interaction, March 11–13, 2009, La Jolla, California, USA.

-----------------------------------------------------------------------------------------------------------

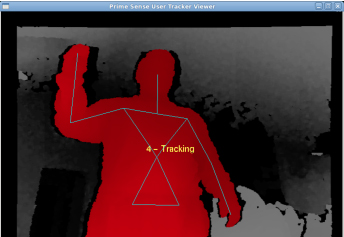

+ perceptual system (sensors with camera)

home+ | 2009

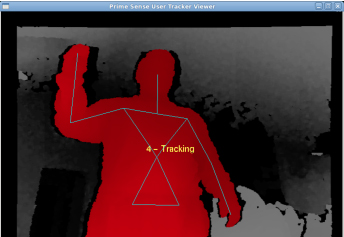

The 2009 home+ prototypes featured a perceptual system (multiple sensors including a ceiling-mounted camera) to control an intelligent, interactive, mobile nightstand (above) to aid the elderly in their homes.

We integrated three different sensing modalities - physical, tactile and visual - to control the navigation of the mobile robotic nightstand within the constrained environment of a general-purpose living room housing a single aging individual in need of assistance and monitoring.

A camera mounted on the ceiling of the room gives a top-down view of the obstacles, the inhabitant/user and the nightstand. Pressure sensors mounted beneath the bed and sofa provide physical perception of the person's state (video; document). This video shows how data collected from the force sensors mounted beneath the sofa captures patterns of human behavior, which then serve as inputs for controlling the mobile nightstand.

A proximity IR sensor on the nightstand acts as a tactile interface, along with a Wii Nunchuk, to control mundane operations on the nightstand.

Intelligence from these three modalities is combined to enable path planning for the nightstand to approach the individual. With growing emphasis on assistive technology for aging individuals, who are increasingly electing to stay in their homes, we showed how ubiquitous intelligence can be brought inside homes to help monitor and provide care to an individual. Our approach took a step toward pervasive intelligence by seamlessly integrating different sensors embedded in the fabric of the environment.
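The way the three modalities could feed a path planner can be sketched roughly as follows. This is a minimal illustration in Python, not the prototype's actual software: it assumes the ceiling camera has already been reduced to a small occupancy grid, that the pressure sensors report one load value per piece of furniture, and that breadth-first search stands in for whatever planner was used. The names `plan_path`, `choose_goal`, `docking_cells` and the threshold value are all hypothetical.

```python
from collections import deque


def plan_path(grid, start, goal):
    """Breadth-first search on a 4-connected occupancy grid (1 = obstacle).

    Returns the list of (row, col) cells from start to goal, or None.
    The grid is an assumed stand-in for the top-down camera view.
    """
    rows, cols = len(grid), len(grid[0])
    parent = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:  # walk back to the start
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and nxt not in parent:
                parent[nxt] = cell
                queue.append(nxt)
    return None


def choose_goal(pressure, docking_cells, threshold=20.0):
    """Pick the docking cell beside the most heavily loaded furniture.

    `pressure` maps furniture name -> sensor reading (hypothetical units);
    returns None when no reading reaches the (assumed) threshold.
    """
    name = max(pressure, key=pressure.get)
    if pressure[name] < threshold:
        return None
    return docking_cells[name]


# Hypothetical room: 0 = free floor, 1 = obstacle seen by the camera.
grid = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
docking_cells = {"bed": (0, 3), "sofa": (2, 3)}
pressure = {"bed": 2.0, "sofa": 55.0}  # the person is on the sofa

goal = choose_goal(pressure, docking_cells)
path = plan_path(grid, (0, 0), goal)
```

Here the pressure readings select the destination, the camera-derived grid constrains the route, and the tactile interface (not modeled) would remain available for direct user commands.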

• mobile nightstand

its lazy susan mechanism shown below (video)

In addition to this fully-functioning, full-scale nightstand prototype, we also prototyped several fully-functioning robotic furnishings (to-scale and full-scale)...

Shown below:

• intelligent lounge chair video; documents

• interactive mobile table video; documents

• intelligent media center video; documents

Shown below:

• ceiling-mounted, fetching robot documents

Our unpublished paper on the perceptual processes of our home+ concept:

V.N. Murali, A.L. Threatt, ... J.O. Brooks, I.D. Walker, and K.E. Green. (October 2013). A Mobile Robotic Personal Nightstand with Integrated Perceptual Processes.

-----------------------------------------------------------------------------------------------------------

+ artificial intelligence

+ continuum robotic worksurface

home+ | 2010

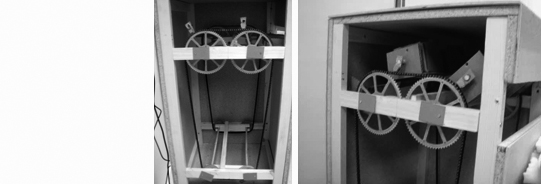

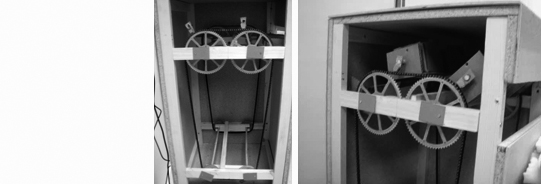

The 2010 home+ focussed on equipping a key component of home+, the nightstand/over-the-bed table, with artificial intelligence capable of predicting the needs of the user and subsequently tailoring its actions (see documents) based on a reward from the user - here, in the form of a gentle stroke, a "pet" (video).
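The idea of tailoring actions to a "pet" reward can be illustrated with a small sketch. This is only one way such learning might be set up, assuming an epsilon-greedy scheme that keeps a running average reward per context and action; the source does not say which algorithm the prototype used, and the class name, contexts, and action names below are hypothetical.

```python
import random
from collections import defaultdict


class PetRewardLearner:
    """Epsilon-greedy learner: a 'pet' counts as reward 1, no pet as 0."""

    def __init__(self, actions, epsilon=0.1, seed=0):
        self.actions = list(actions)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.value = defaultdict(float)  # (context, action) -> mean reward
        self.count = defaultdict(int)    # (context, action) -> times tried

    def choose(self, context):
        """Mostly pick the best-known action, occasionally explore."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.actions)
        return max(self.actions, key=lambda a: self.value[(context, a)])

    def feedback(self, context, action, petted):
        """Update the running mean reward for the action just taken."""
        key = (context, action)
        self.count[key] += 1
        reward = 1.0 if petted else 0.0
        self.value[key] += (reward - self.value[key]) / self.count[key]


# Hypothetical usage: the user pets the table after it fetches glasses.
learner = PetRewardLearner(
    ["raise_table", "fetch_glasses", "dim_lights"], epsilon=0.0
)
learner.feedback("reading", "raise_table", petted=False)
learner.feedback("reading", "fetch_glasses", petted=True)
preferred = learner.choose("reading")
```

With exploration switched off (`epsilon=0.0`), the learner repeats whichever action has earned the highest average reward in that context; a nonzero `epsilon` would let it occasionally try alternatives.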

The nightstand-table itself was iterated from the previous year's version, adding (1.) a continuum robotic worksurface, actuated by pneumatic muscles, that senses the activity of a user and responds accordingly; and (2.) a smart storage mechanism that collects and delivers objects at the request of the user. The fully-functioning, full-scale prototype successfully implemented each of these functions.

This video presents the full system of furnishings operating in the context of a user's daily routine.

• interactive storage mechanism

(image below; see documents link above)

-----------------------------------------------------------------------------------------------------------

home+ | 2011

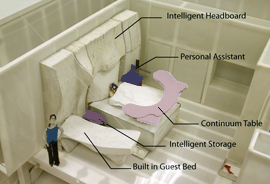

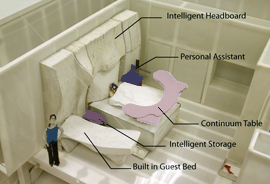

The 2011 home+ focussed on prototyping to-scale (1:6) a robotic, hospital patient-room ecosystem. (See figure above; video).

We developed this home+ with the help of clinicians at the Roger C. Peace Rehabilitation Hospital of the Greenville Hospital System University Medical Center - our partners for ART and home+.

This early prototyping effort represents our vision for the larger robotic patient room (beyond its home application), and identifies opportunities for more focused work on an Assistive Robotic Table (ART).

Along with the to-scale prototype shown above, we also created full-scale, fully-functioning interactive and/or intelligent components:

• intelligent headboard video; documents

• touch-sensitive storage units video; documents

• mobile fetching robot video; documents

• morphing table with vacuum actuation video

A video with all these components operating as home+ is found here.

Our paper for IROS presenting home+ 2011 (see also video 1 and video 2):

Threatt, A. L., Merino, J., Green, K.E., Walker, I.D., Brooks, J. O. et al. A Vision of the Patient Room as an Architectural Robotic Ecosystem. Video (IEEE archival) in Threatt, Proceedings of IROS 2012: the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, Vilamoura, Algarve, Portugal, October 2012.

-----------------------------------------------------------------------------------------------------------

ART | 2011-15

Beginning in 2011, with NSF funding (SHB, now SCH), we developed the nightstand of home+, which we named the Assistive Robotic Table (ART). ART was co-designed with the clinicians of the Roger C. Peace Rehabilitation Hospital of the Greenville Hospital System University Medical Center - our partners.

ART is a hybrid of the typical nightstand found in homes and the over-the-bed table found in hospital rooms; it features a plug-in "continuum robotic" surface that supports rehabilitation.

We envision that ART and the other components of the home+ vision recognize, communicate with, and partly remember each other in interaction with human users. (See CHI 2014's Video Preview and our ART project page)

-----------------------------------------------------------------------------------------------------------

+ nonverbal (audio-visual) communication

+ gesture-learning interface

NVC | 2011-15

We have been developing an appropriate and effective nonverbal communication (NVC) platform for robots communicating with people: a mode of communication that dignifies what it is to be human by neither competing with us nor imposing on our social-emotional-cognitive constitution. We have initially developed a working prototype integrated with ART with support from NSF's Smart Health & Wellbeing Program. We envision such a system working across home+.

Our non-verbal communication (NVC) is conveyed by the familiar means of Audio-Visual Communication (AVC): low-cost lighting (colors, patterns) and sounds. The NVC we designed is based on an understanding of cognitive, perceptual processes of non-verbal communication in humans.

The NVC, in turn, affords a communicative dialogue (i.e. acknowledging requests, or providing requested feedback) that conveys the purpose of accomplishing tasks.

Our use of learning algorithms (Growing Neural Gas) offers both user and robot the capacity to interrupt, query, and correct the dialogue. It also lets the robot convey some semblance of emotional information (e.g. urgency, respect, frustration) at a level that neither disconcerts the user nor invites the user to misconstrue the robot as human.

Our paper for IEEE Transactions on our Gesture Learning Interface:

Yanik, P.M., Merino, J., Threatt, A.L., Manganelli, J., Brooks, J.O., Green, K.E. and Walker, I.D. “A Gesture Learning Interface for Simulated Robot Path Shaping with a Human Teacher.” IEEE Transactions on Human-Machine Systems, 44(1): 41–54, 2014. (See our NVC project page.)
